LLAMA 4 Unveiled: 10 Million Token Context Window & Revolutionary AI Performance

Usama Navid

AI and Automation Expert

Breaking News: Meta Releases LLAMA 4 With Industry-Leading Capabilities

Meta has officially released LLAMA 4, its newest suite of open-source AI models. According to Meta's announcement, these models now compete directly with, and in some cases outperform, proprietary models from major players like Google and OpenAI.

Key Highlight: LLAMA 4 Scout features an unprecedented 10 million token context window, possibly making it the first publicly available model with such extensive context handling capabilities.
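To put the context sizes in perspective, here is a minimal sketch of a token-budget check. The ratio of about four characters per token is an assumed rule of thumb for English text, not a property of the LLAMA 4 tokenizer, and the helper names and the reserved output budget are hypothetical.

```python
# Rough token-budget check. CHARS_PER_TOKEN is an assumed heuristic for
# English text; a real tokenizer will give different counts.
CHARS_PER_TOKEN = 4

# Token limits as quoted in this article.
CONTEXT_WINDOWS = {
    "scout": 10_000_000,
    "maverick": 1_000_000,
}


def estimate_tokens(text: str) -> int:
    """Estimate the token count of `text` from its character length."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def fits_in_context(text: str, model: str, reserve_for_output: int = 4_096) -> bool:
    """Return True if the prompt plus a reserved output budget fits the window."""
    return estimate_tokens(text) + reserve_for_output <= CONTEXT_WINDOWS[model]


# Stand-in for roughly 12 million characters of concatenated documents.
corpus = "x" * 12_000_000
for name in CONTEXT_WINDOWS:
    print(name, fits_in_context(corpus, name))
```

For anything beyond a quick estimate, count tokens with the model's actual tokenizer instead of the character heuristic.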

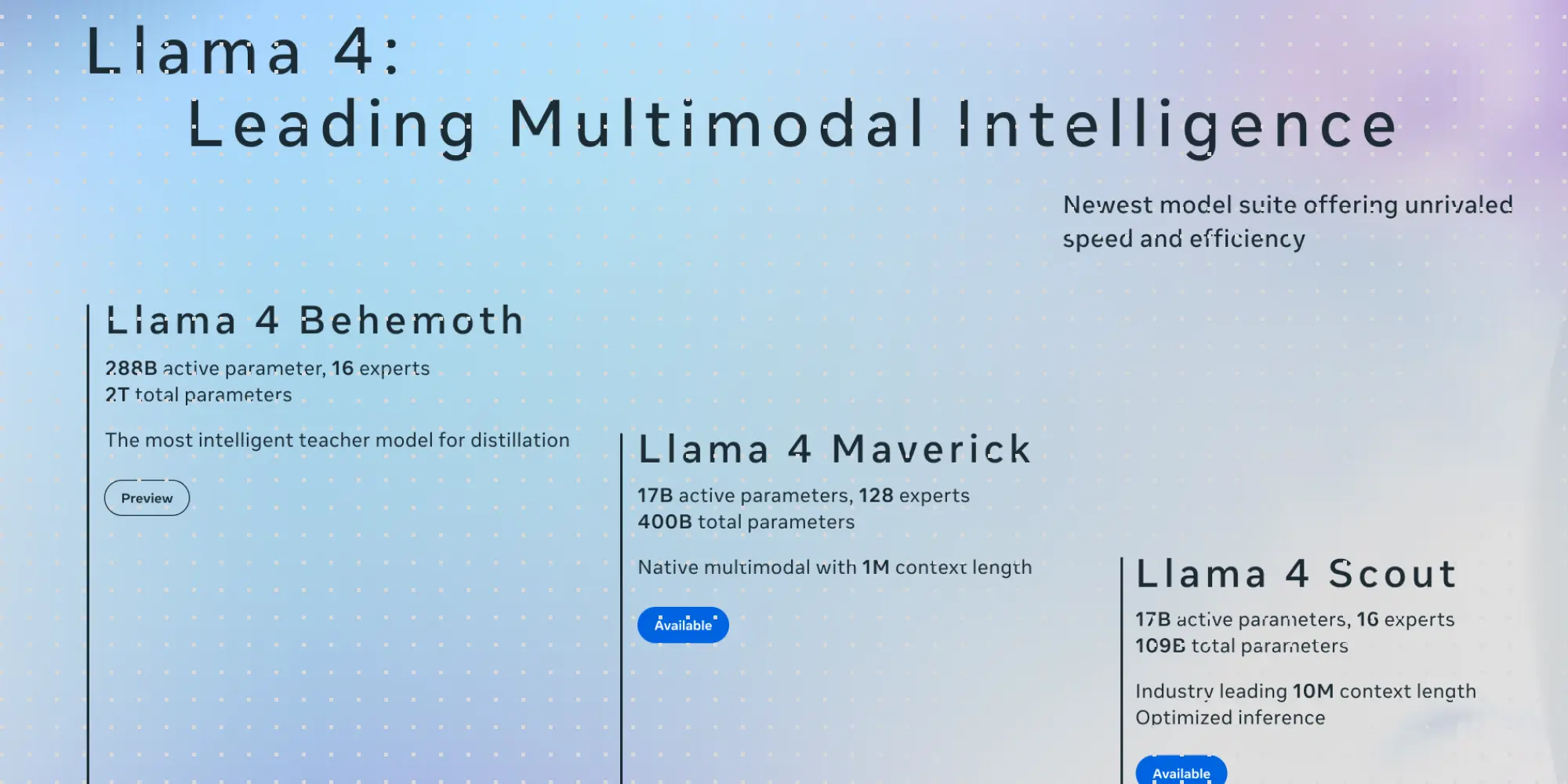

The LLAMA 4 Family: Three Powerful Variants

Meta has introduced three LLAMA 4 variants, each designed for different use cases and computational requirements:

1. LLAMA 4 Scout

- Active Parameters: 17 billion

- Total Parameters: 109 billion (16 experts)

- Context Length: Industry-leading 10 million tokens

- Hardware Requirements: Fits on a single H100 GPU (with Int4 quantization, per Meta)

- Performance: Outperforms Gemma 3, Gemini 2.0 Flash-Lite, and Mistral 3.1

- Specialty: Best multimodal model that fits on a single H100 GPU

2. LLAMA 4 Maverick

- Active Parameters: 17 billion

- Total Parameters: 400 billion (128 experts)

- Context Length: 1 million tokens

- Performance: Beats GPT-4o and Gemini 2.0 Flash across a range of benchmarks

- Pricing Efficiency: $0.20 to $0.50 per million tokens, depending on deployment (compared to GPT-4o's $4.38)

- Benchmarks: An experimental chat version scored an ELO of 1417 on LMArena

- Specialty: Native multimodal capabilities with best-in-class performance-to-cost ratio

3. LLAMA 4 Behemoth (Coming Soon)

- Active Parameters: 288 billion

- Total Parameters: 2 trillion (16 experts)

- Status: Still under training

- Performance: Meta reports that it already outperforms GPT-4.5, Claude Sonnet 3.7, and Gemini 2.0 Pro on several STEM benchmarks

- Release: More details to be announced soon
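The parameter counts above translate into a rough weight-memory estimate. The sketch below is back-of-the-envelope arithmetic only: it assumes an 80 GB H100, counts weights alone (no KV cache, activations, or runtime overhead), and uses hypothetical helper names.

```python
H100_MEMORY_GB = 80  # assumed: the 80 GB H100 variant

# (active, total) parameters in billions, as listed in this article.
VARIANTS = {
    "Scout": (17, 109),
    "Maverick": (17, 400),
    "Behemoth": (288, 2000),
}

# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"bf16": 2.0, "fp8": 1.0, "int4": 0.5}


def active_fraction(name: str) -> float:
    """Share of the total parameters that is active for a single token."""
    active, total = VARIANTS[name]
    return active / total


def weight_memory_gb(name: str, precision: str) -> float:
    """Weights only: billions of parameters times bytes per parameter gives GB."""
    _, total_billions = VARIANTS[name]
    return total_billions * BYTES_PER_PARAM[precision]


for name in VARIANTS:
    sizes = {p: weight_memory_gb(name, p) for p in BYTES_PER_PARAM}
    print(f"{name}: {active_fraction(name):.1%} active, weight GB by precision: {sizes}")
```

Under these assumptions, Scout's weights only drop below a single H100's memory at 4-bit precision, which is why the single-GPU claim depends on quantization.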

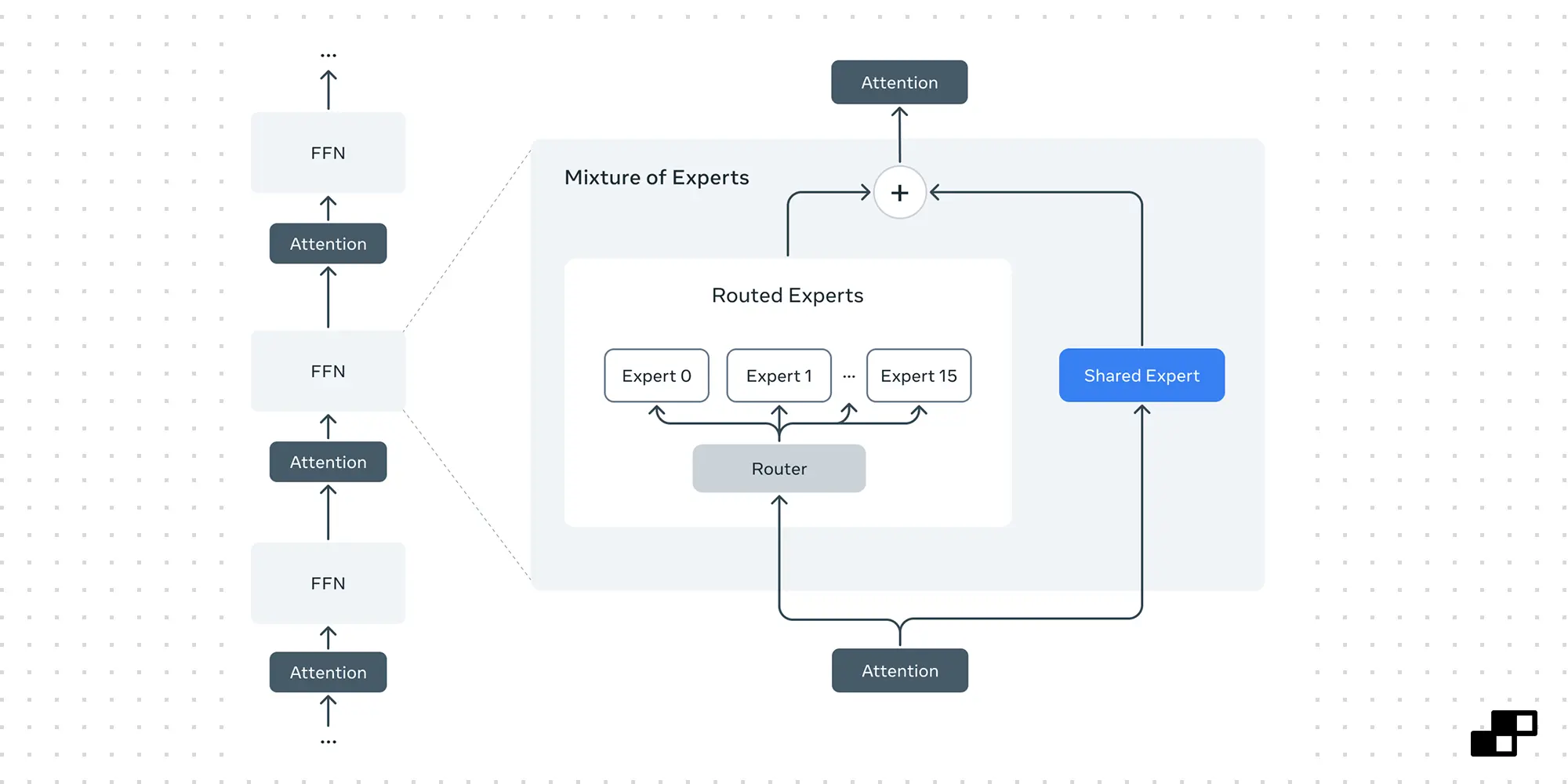

Mixture of Experts Architecture

LLAMA 4 leverages a Mixture of Experts (MoE) architecture rather than traditional dense transformer models. In this approach:

- Each token gets routed to specific experts

- Not all parameters are active simultaneously: for Scout and Maverick, only 17B of the 109B and 400B total parameters, respectively, are active per token

- This architecture enables more efficient inference while maintaining high performance
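The routing idea can be sketched in a few lines of plain Python. This is generic top-k gating for illustration, not LLAMA 4's actual router: the softmax gate, the toy experts, and all function names are assumptions.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route(router_logits, top_k=1):
    """Pick the top_k experts for one token and renormalize their gate weights."""
    probs = softmax(router_logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]


def moe_layer(token, experts, router_weights, top_k=1):
    """Run only the selected experts and mix their outputs by gate weight."""
    # One router logit per expert: dot product of that expert's row with the token.
    logits = [sum(w * x for w, x in zip(row, token)) for row in router_weights]
    out = [0.0] * len(token)
    for idx, gate in route(logits, top_k):
        expert_out = experts[idx](token)  # unselected experts are never called
        out = [o + gate * v for o, v in zip(out, expert_out)]
    return out


# Toy setup: 4 "experts" that simply scale the token by different factors.
random.seed(0)
dim, n_experts = 8, 4
experts = [lambda x, s=s: [s * v for v in x] for s in (0.5, 1.0, 2.0, 3.0)]
router_weights = [[random.gauss(0, 1) for _ in range(dim)] for _ in range(n_experts)]
token = [random.gauss(0, 1) for _ in range(dim)]
print(moe_layer(token, experts, router_weights, top_k=1))
```

Because only the chosen experts run, compute per token scales with the active parameters rather than the total, which is the efficiency gain described above.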

Benchmark Performance Highlights

LLAMA 4 Maverick shows impressive performance across several key benchmarks:

- MathVista: Outperforms Gemini 2.0 Flash

- ChartQA: Better than Gemini 2.0 Flash

- DocVQA: Surpasses even GPT-4o

- LiveCodeBench: Outperforms both GPT-4o and DeepSeek V3.1

Cost Efficiency

Meta emphasizes LLAMA 4's cost efficiency compared to competitors:

| Model | Cost per 1M Tokens | Notes |
|---|---|---|
| LLAMA 4 Maverick | $0.20-$0.50 | Distributed inference |
| LLAMA 4 Maverick | $0.30-$0.50 | Single H100 |
| DeepSeek V3.1 | $0.50 | Similar performance |
| Gemini 2.0 Flash | $1.70 | Higher cost |
| GPT-4o | $4.38 | Highest cost |
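To see what these per-token prices mean for a budget, here is a small sketch that scales them to a monthly workload. The prices are copied from the table above; the workload size and the function name are hypothetical.

```python
# USD per 1M tokens as (low, high), copied from the table above.
PRICE_PER_MILLION = {
    "LLAMA 4 Maverick (distributed)": (0.20, 0.50),
    "LLAMA 4 Maverick (single H100)": (0.30, 0.50),
    "DeepSeek V3.1": (0.50, 0.50),
    "Gemini 2.0 Flash": (1.70, 1.70),
    "GPT-4o": (4.38, 4.38),
}


def monthly_cost(tokens: int, model: str) -> tuple:
    """Return the (low, high) cost in USD for processing `tokens` tokens."""
    low, high = PRICE_PER_MILLION[model]
    millions = tokens / 1_000_000
    return (low * millions, high * millions)


workload = 250_000_000  # hypothetical monthly token volume
for model in PRICE_PER_MILLION:
    low, high = monthly_cost(workload, model)
    print(f"{model}: ${low:,.2f} to ${high:,.2f}")
```

Real bills also depend on the input/output token mix and the provider, so treat the result as an order-of-magnitude comparison.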

How to Access LLAMA 4

The models are available through several channels:

- Direct Download: Visit llama.com to fill out a form and receive a download link (limited to 5 downloads within 48 hours)

- Meta AI Interface: Try it via Meta AI in WhatsApp, Messenger, or Instagram Direct

- Web Interface: Access through meta.ai

Available Model Variants

Users can download both pre-trained base models and instruction-tuned variants:

- Pre-trained weights: Scout (17B/16E) and Maverick (17B/128E)

- Instruction-tuned weights: Standard precision and FP8 (reduced precision for easier deployment)

Licensing Considerations

It's worth noting that LLAMA 4 keeps the licensing restriction from previous versions: companies whose services have more than 700 million monthly active users must request a separate license from Meta.

What's Next for LLAMA?

Meta has hinted at two additional models in development:

- LLAMA 4 Reasoning: More details expected next month

- LLAMA 4 Behemoth: The massive 2 trillion parameter model still in training

Conclusion: A Milestone for Open-Source AI

LLAMA 4 represents a significant achievement for Meta and the open-source AI community. For the first time, open-source models are competing with—and in some cases exceeding—the capabilities of proprietary leaders in the small, midsize, and potentially frontier model categories.

With its unprecedented context window, competitive benchmarks, and cost efficiency, LLAMA 4 could reshape how developers and enterprises approach AI implementation, making advanced capabilities more accessible and affordable.

Stay tuned for more model releases and updates from Meta as the LLAMA 4 ecosystem continues to evolve.

Getting Started with LLAMA 4: The Official Cookbook

Meta has also released an official LLAMA Cookbook on GitHub with comprehensive examples to help developers get started quickly. The cookbook includes a notebook specifically for building with LLAMA 4 Scout that covers:

- Environment Setup: Configuration instructions for your development environment

- Loading the Model: Step-by-step guide to properly initialize LLAMA 4

- Long Context Demo: Examples showcasing the 10 million token context window

- Text Conversations: Techniques for effective text-based interactions

- Multilingual Capabilities: Working with LLAMA 4 across multiple languages

- Multimodal: Single Image Understanding: Processing and analyzing individual images

- Multimodal: Multi-Image Understanding: Working with multiple images simultaneously

- Function Calling with Image Understanding: Advanced integration of vision and function calling

You can access the cookbook at GitHub: meta-llama/llama-cookbook to start experimenting with these powerful models immediately.
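As a starting point for the "Loading the Model" and "Text Conversations" topics, here is a minimal sketch that assumes the Hugging Face Transformers route rather than the llama.com download. It is not taken from the cookbook: the repository id is an assumption, access to it is gated, and the code needs a recent `transformers` release with LLAMA 4 support plus enough GPU memory for the weights.

```python
# Assumed Hugging Face repository id for the instruction-tuned Scout weights.
MODEL_ID = "meta-llama/Llama-4-Scout-17B-16E-Instruct"


def build_messages(prompt: str) -> list:
    """Wrap a plain-text prompt in the chat format the processor expects."""
    return [{"role": "user", "content": [{"type": "text", "text": prompt}]}]


def generate(prompt: str, max_new_tokens: int = 128) -> str:
    """Load the model and return its reply to a single text prompt."""
    # Imported lazily so build_messages works without the heavy dependencies.
    import torch
    from transformers import AutoProcessor, Llama4ForConditionalGeneration

    processor = AutoProcessor.from_pretrained(MODEL_ID)
    model = Llama4ForConditionalGeneration.from_pretrained(
        MODEL_ID,
        device_map="auto",           # spread layers across available GPUs
        torch_dtype=torch.bfloat16,
    )
    inputs = processor.apply_chat_template(
        build_messages(prompt),
        add_generation_prompt=True,
        tokenize=True,
        return_dict=True,
        return_tensors="pt",
    ).to(model.device)
    outputs = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Drop the prompt tokens so only the newly generated reply is decoded.
    new_tokens = outputs[:, inputs["input_ids"].shape[-1]:]
    return processor.batch_decode(new_tokens, skip_special_tokens=True)[0]
```

Calling `generate("Summarize the Mixture of Experts idea in two sentences.")` would download the weights on first use, so run it only on a machine sized for the model.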

Have you tried LLAMA 4 yet? Share your experiences in the comments below!

Usama Navid is a contributor at Buildberg, sharing insights on technology and business transformation.
